Introduction

I recently seized the opportunity to acquire an IBM zSeries 890 computer in good condition, which I knew had been well cared for (including careful de-installation). This model was sold from April 2004 until June 2008. It is a newer member of the series of computers that IBM began with the System/360 back in 1964. The instruction set and system features have seen changes and additions over that time, but most user programs written for the S/360 Model 30 in 1964 still run unchanged on the systems being sold today, such as the IBM zEnterprise 196 (though the changes have required updates to system-level software, such as operating systems).

Major landmarks are the introduction of the IBM S/360 in 1964 (with a 24-bit address space), virtual memory becoming standard on S/370 in 1972, multiple CPU/SMP systems in 1973, LPAR (logical partitions) in 1980, a 31-bit address space with S/370-XA in 1981, S/390 with ESCON (10 and later 17 MByte/s fiber-based peripheral attachment), CMOS-based CPUs with the S/390 G3 in the 1990s, and 64-bit architecture in 2000 with the IBM z900. More information about all of these is available via Google.

System Details

The system is in a wide, heavy rack, about 30" wide and 76" tall, and about 1500 lbs according to IBM. This is a typical weight and size for an IBM rack of equipment (similar to older IBM S/390 and RS/6000 SP systems). Inside the rack, starting at the top, are the rack-level power supply, the processor and memory cabinet (CEC), and at the bottom, the I/O card enclosure. On a set of fold-out arms on the front of the machine are the Service Element (SE) laptops, which act as a kind of service processor: they manage loading firmware into the system, turning power on and off, configuring the hypervisor for LPARs, accessing the system consoles, and other low-level system tasks. They also manage features such as Capacity Upgrade on Demand (CUoD) and Capacity Backup, limit the hardware to what you have actually paid for, and are serial-number locked to the particular machine they're connected to.

My machine is a 2086-A04 (the hardware model common to all z890 systems), configured as a 2086-140, with two dual-port fiber Gigabit Ethernet adapters, two dual-port 2Gb Fibre Channel/FICON adapters, and two 15-port ESCON adapters. In addition, the SE laptops connect to the outside world using a pair of 10/100 PCMCIA Ethernet adapters. Earlier systems used Token Ring instead... I'm happy to not have to deal with Token Ring for this anymore.

Central Electronics Complex - CPU and Memory

IBM mainframes are given a speed rating, originally in MIPS and now in MSU, which is used to price the software that runs on the mainframe. Mine is rated at 110 MIPS and 17 MSU. By comparison, a full-speed CPU is rated at 366 MIPS and 56 MSU, which is the speed of my IFL (Integrated Facility for Linux, a processor dedicated to running Linux). The z890 came in 28 different speed increments (1-4 CPUs x 1-7 speed ratings), from 26 MIPS / 4 MSU to 1365 MIPS / 208 MSU, so you could closely match the system speed to what you required, which helps keep your software costs down.
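As a quick sanity check on those numbers, here is a small Python sketch that counts the speed increments and works out how my configuration compares to a full-speed CPU. It only uses the figures quoted above; the variable names are my own and are not IBM terminology.

```python
# Figures taken from the paragraph above; names are illustrative only.
cpu_counts = range(1, 5)       # 1-4 CPUs
speed_ratings = range(1, 8)    # 1-7 speed ratings per CPU count

# Every (CPU count, speed rating) pair is one orderable speed increment.
increments = [(cpus, rating) for cpus in cpu_counts for rating in speed_ratings]
print(f"{len(increments)} speed increments")

my_mips, full_mips = 110, 366
my_msu, full_msu = 17, 56

mips_fraction = my_mips / full_mips
msu_fraction = my_msu / full_msu
print(f"My CPU runs at about {mips_fraction:.0%} of full speed by MIPS")
print(f"My CPU runs at about {msu_fraction:.0%} of full speed by MSU")
```

Both ratios come out at roughly 30%, so the MIPS and MSU ratings agree on how much slower my general-purpose CPU is than the full-speed IFL.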

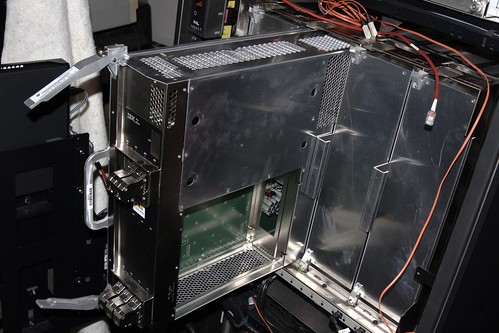

Processor book, with memory removed

The z890 is actually nearly the same as a z990 system, except that it has only one processor book (out of the 4 that can fit into a one-rack z990 system) and only scales to 4 user-accessible cores (there is one additional core, called a "System Assist Processor" or SAP, which is dedicated to system I/O handling). The z990, by comparison, can scale up to 56 total processor cores.

System memory module - 8GB

The system has 8GB of RAM, on a custom module that plugs into the processor "book". Memory is available in 8GB increments up to 32GB. Since there is only one RAM slot, the 24GB option is actually implemented as a 32GB module with 8GB "turned off" by IBM. As you will learn by working with these systems for a bit, IBM has an annoying habit of charging you to turn on parts of the system which you already own, but haven't paid to unlock yet.

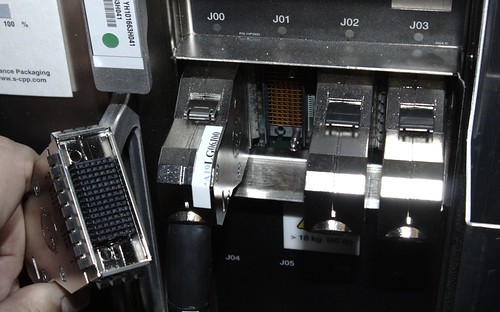

STI cables and connectors

Each processor book (the z890 has only one) has 8 "STI" (Self-Timed Interface) links, which connect the CPU and memory to the I/O adapters. In the z890, these links run at 2GBps (16Gbps). In a fully configured system you can use 7 of them for I/O cards, and you can use the remaining one (or more) to network (couple) systems together to build a more redundant system. With the appropriate hardware, you can run these coupling links (though not at the full 2GBps) up to 100km, creating what IBM calls a "geographically-diverse" system, which can help provide a disaster-recovery system link.
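To put the STI numbers in perspective, here is a rough Python sketch of the unit conversion and the theoretical peak bandwidth across the links. The aggregate figures are simple multiplication under the assumption that every link can run at full rate simultaneously; they are not measured throughput, and the names are my own.

```python
# Figures from the paragraph above; constant names are illustrative only.
STI_LINKS_PER_BOOK = 8
IO_LINKS = 7                   # links usable for I/O cards in a full system
LINK_GBYTES_PER_SEC = 2        # 2GBps per STI link
BITS_PER_BYTE = 8

# 2 gigabytes per second expressed in gigabits per second.
link_gbits_per_sec = LINK_GBYTES_PER_SEC * BITS_PER_BYTE

# Theoretical peaks only: assumes all links run at full rate at once.
io_peak_gbytes_per_sec = IO_LINKS * LINK_GBYTES_PER_SEC
book_peak_gbytes_per_sec = STI_LINKS_PER_BOOK * LINK_GBYTES_PER_SEC

print(f"One STI link: {LINK_GBYTES_PER_SEC} GBps = {link_gbits_per_sec} Gbps")
print(f"{IO_LINKS} I/O links: up to {io_peak_gbytes_per_sec} GBps")
print(f"All {STI_LINKS_PER_BOOK} links: up to {book_peak_gbytes_per_sec} GBps")
```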

Peripherals

Two things that I appreciate about this system compared to past systems are the Ethernet on its SE (which also lets me boot the system from an FTP server on its network), and SCSI over Fibre Channel support, which lets me use standard FC RAID arrays as storage for a Linux system.

IBM 3174 Terminal Controllers and 3483 Terminal

In addition, the FICON adapters can act as a system channel (like ESCON or Bus & Tag) to appropriate peripherals, at speeds of up to about 200MBps. The system also has ESCON, which is a more traditional connection and allows connections to peripherals at up to 17MBps. Parallel Channel (aka S/370 Channel, or Bus & Tag) peripherals can be hooked up using a protocol converter such as the IBM 9034. This will let me connect my 3480 cartridge-style channel-attached tape drives, 3174-21L 3270-style terminal controllers, and eventually 3420-8 vacuum-column 9-track tape drives to the system.

IBM 9034 ESCON to Bus & Tag Converter

To Be Continued...

Future posts will document installation and setup of Debian GNU/Linux, and peripherals.